Day 29: Building Production-Ready Task Queues with Celery

What We're Building Today

Today we're implementing a task queue system using Celery, with Redis as the message broker. It will handle asynchronous operations such as metric processing, notifications, and report generation, so that slow work runs outside the request path.

Key Components We'll Implement:

Celery worker configuration with Redis broker

Task queue organization and routing

Worker management and scaling

Real-time task monitoring dashboard

Automatic retry mechanisms with exponential backoff
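To make the last item concrete, here is a minimal standard-library sketch of how an exponential backoff schedule can be computed. The function name `backoff_delay` and its parameters are illustrative assumptions, not part of Celery. Celery offers comparable behavior declaratively through task options such as `autoretry_for`, `retry_backoff`, `retry_backoff_max`, and `retry_jitter`.

```python
import random


def backoff_delay(retries, factor=1, maximum=600, jitter=False):
    """Seconds to wait before the next attempt.

    retries: number of retries already made (0 for the first retry).
    factor:  base delay in seconds, doubled on every retry.
    maximum: upper bound on the delay, so waits do not grow forever.
    jitter:  if True, pick a random delay in [0, delay] so that many
             failing tasks do not all retry at the same moment.
    """
    delay = min(maximum, factor * (2 ** retries))
    if jitter:
        delay = random.randrange(delay + 1)
    return max(0, delay)


# Delay schedule for seven retries with a 2-second base and a 60-second cap.
schedule = [backoff_delay(n, factor=2, maximum=60) for n in range(7)]
print(schedule)  # [2, 4, 8, 16, 32, 60, 60]
```

The cap matters as much as the doubling: without it, a task that keeps failing would soon be waiting hours between attempts.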

Why Task Queues Matter in Real Systems

Think about Instagram processing millions of photo uploads or Slack delivering messages instantly. Behind the scenes, task queues handle these operations asynchronously, preventing user-facing requests from blocking on heavy processing.

Task queues let your application hand work off to a pool of worker processes, which can run on other machines. You scale horizontally by adding workers, and a task that fails can be retried without failing the user's request.

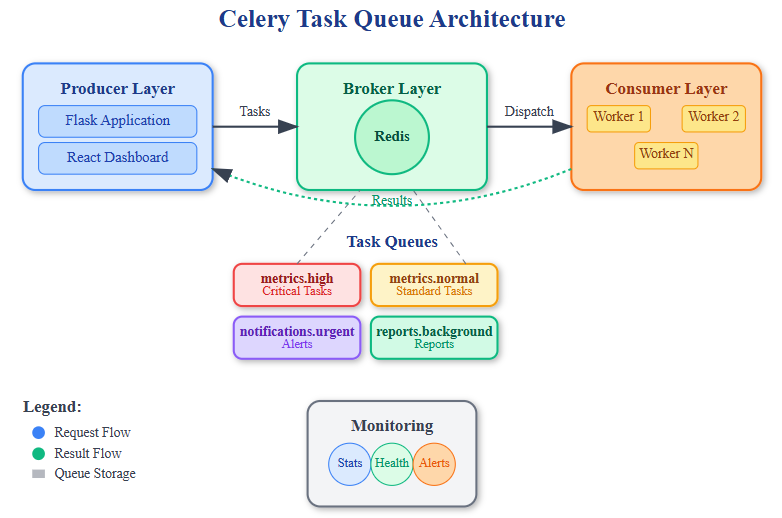

Component Architecture

Our Celery setup consists of three main layers:

Producer Layer: Your Flask application creates tasks and sends them to Redis queues. Each task message carries the task name, its arguments, and metadata such as the task ID and retry count.

Broker Layer: Redis acts as the message broker, holding task messages in separate queues selected by routing rules. When it is also configured as the result backend, Redis stores task state and return values as well.

Consumer Layer: Celery workers pull tasks from the Redis queues, execute them, and report their status back. Workers can be scaled horizontally across multiple machines.
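The three layers meet in the Celery configuration. Below is a rough sketch of a settings module of the kind Celery can load with `app.config_from_object("celeryconfig")`. The setting names (`broker_url`, `result_backend`, `task_default_queue`, `task_routes`) are standard Celery settings. The Redis URLs, the queue names, and the task names under `tasks.` are assumptions made up for illustration.

```python
# celeryconfig.py: a hypothetical settings module for this lesson.

# Broker layer: where the producer puts task messages and workers fetch them.
# Assumes a Redis server on localhost; adjust host, port, and DB as needed.
broker_url = "redis://localhost:6379/0"

# Result backend: where task state and return values are stored.
# A separate Redis database keeps results apart from queued messages.
result_backend = "redis://localhost:6379/1"

# Tasks without an explicit route land in this queue.
task_default_queue = "default"

# Routing: map task names (hypothetical here) to dedicated queues so that
# slow report jobs cannot delay time-sensitive notifications.
task_routes = {
    "tasks.process_metrics": {"queue": "metrics"},
    "tasks.send_notification": {"queue": "notifications"},
    "tasks.generate_report": {"queue": "reports"},
}
```

With routes like these, the consumer layer can be scaled per queue. For example, assuming the Celery application lives in a module named `app`, a worker started with `celery -A app worker -Q notifications --concurrency=4` would consume only from the `notifications` queue.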